Do you want to optimize your Magento or Adobe Commerce robots.txt file?

Not sure how or why the robots.txt file matters for your SEO? We have an answer. In this article, we will show you how to optimize your Magento or Magento 2 robots.txt for SEO and help you understand why the file is significant.

First, what is the robots.txt file?

The robots exclusion standard, also known as the robots exclusion protocol or simply the robots.txt file, plays an important role in your site’s overall SEO performance.

The robots.txt file lets you communicate with search engines and tell them which parts of your site they may crawl and which areas of the website should not be processed or scanned.

Do we need the Robots.txt file?

The robots.txt file is one of the main ways of telling a search engine where it can and cannot go on your website.

It is a text file present in the root directory of a website. The absence of a robots.txt file will not stop search engines from crawling and indexing your website. However, it is highly recommended that you create one.
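Because the file always sits at the root of the site, its address can be worked out from any page URL on the same site. Here is a minimal Python sketch of that idea; robots_txt_url is a hypothetical helper name and www.example.com is a placeholder domain, not something specific to Magento.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Placeholder URL used only for illustration.
print(robots_txt_url("https://www.example.com/women/tops.html?color=red"))
# prints https://www.example.com/robots.txt
```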

It looks basic, but improper use of the robots.txt file can hurt your rankings and seriously harm your website. Used properly, it keeps crawlers away from duplicate content. We have tried to cover all the uses of the robots.txt file for your website.

Robots.txt files are useful in many ways:

-If you don’t want search engines to index your internal search results pages.

-If you want search engines to ignore any duplicate pages on your website.

-If you don’t want search engines to index certain files on your website (images, PDFs, etc.)

-The robots.txt file controls how search engine spiders see and interact with your web pages.

-This file, and the bots it interacts with, are fundamental parts of how search engines work.

-If you don’t want search engines to index certain areas of your website or a whole website.

-If you want to tell search engines where your sitemap is located.
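A few of the uses listed above can be sketched with Python's standard urllib.robotparser module, which reads robots.txt rules and answers whether a crawler may fetch a URL. The rules below are assumptions for illustration only: /catalogsearch/ is taken to be the internal search results path, /media/downloads/ is a hypothetical folder of files, and the sitemap address is a placeholder. The site_maps() call needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only; every path and URL here is an assumption.
ROBOTS_TXT = """\
User-agent: *
Disallow: /catalogsearch/
Disallow: /media/downloads/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path: str, agent: str = "*") -> bool:
    """Ask the parsed rules whether agent may fetch path on the example site."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(allowed("/catalogsearch/result/?q=shirt"))  # False: search results blocked
print(allowed("/media/downloads/manual.pdf"))     # False: file folder blocked
print(allowed("/women/tops.html"))                # True: normal page
print(parser.site_maps())                         # ['https://www.example.com/sitemap.xml']
```

Note that this parser only does plain prefix matching on paths, so it is a quick sanity check rather than a model of how any particular search engine reads the file.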

Creating a robots.txt file:

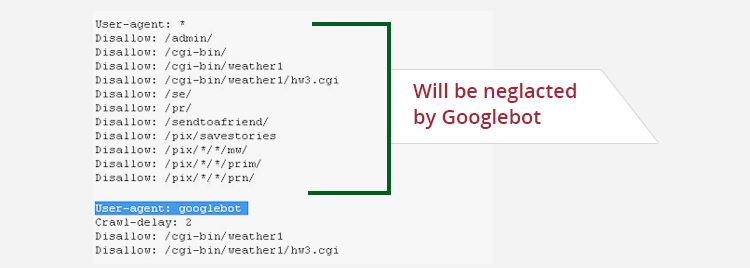

To make a robots.txt file, you need access to the root of your domain. The simplest robots.txt file uses two keywords, User-agent and Disallow.

User-agents are search engine robots (or web crawler software); Disallow is a command for the user-agent that tells it not to access a particular URL.

On the other hand, to give Google access to a particular URL in a child directory of a disallowed parent directory, you can use a third keyword, Allow.

The syntax for using the keywords is as follows:

User-agent: [the name of the robot the following rule applies to]

Disallow: [the URL path you want to block]

Allow: [the URL path of a sub-directory, within a blocked parent directory, that you want to unblock]

The User-agent line and the Disallow line that follows it are together considered a single entry in the file, where the Disallow rule only applies to the user-agent(s) specified above it.
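The way an entry combines User-agent, Disallow and Allow can be tried out with Python's standard urllib.robotparser module. This is only a sketch under assumptions: the /media/ and /media/catalog/ paths and the example.com domain are placeholders. The Allow line is listed first because Python's parser applies the first rule that matches, whereas Google documents that it picks the most specific matching rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

# One entry: the rules below apply only to the user-agent named above them.
# Paths are hypothetical and chosen only to show a child inside a blocked parent.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /media/catalog/
Disallow: /media/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

BASE = "https://www.example.com"  # placeholder domain


def can_crawl(agent: str, path: str) -> bool:
    """Return True if the parsed rules let agent fetch path."""
    return parser.can_fetch(agent, BASE + path)


print(can_crawl("Googlebot", "/media/catalog/shirt.jpg"))  # True: unblocked child
print(can_crawl("Googlebot", "/media/tmp/cache.bin"))      # False: blocked parent
print(can_crawl("Bingbot", "/media/tmp/cache.bin"))        # True: entry is not for Bingbot
```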

You can include as many entries as you want, and a single entry can list several user-agents followed by several Disallow lines that apply to all of them. You can make the User-agent line apply to all web crawlers by using an asterisk (*), as in the example below:

User-agent: *

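On its own, this line only selects which crawlers the entry applies to. Followed by the line Disallow: /, it tells every search engine to stay away from all pages and files on the website. Say, however, that you simply want to keep search engines out of the folder that contains your administrative control panel. In that case you would disallow only that folder's path instead of the whole site.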
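To make the difference concrete, the sketch below compares an entry that blocks the whole site (User-agent: * followed by Disallow: /) with one that blocks only an admin folder. It uses Python's standard urllib.robotparser module; the /admin/ path is an assumption, since the admin URL of a real store is often customized, and build_parser is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in a string (no network access needed)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Blocks every crawler from every URL.
block_everything = build_parser("User-agent: *\nDisallow: /\n")
# Blocks every crawler from the (assumed) admin folder only.
block_admin_only = build_parser("User-agent: *\nDisallow: /admin/\n")

home = "https://www.example.com/"
admin = "https://www.example.com/admin/dashboard"

print(block_everything.can_fetch("Googlebot", home))   # False
print(block_admin_only.can_fetch("Googlebot", home))   # True
print(block_admin_only.can_fetch("Googlebot", admin))  # False
```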

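Private pages such as the login page, checkout page, cart page and server settings, along with anything that exposes private information (account numbers, PINs, photographs, etc.), should be disallowed. Keep in mind that robots.txt is itself publicly readable and only asks well-behaved crawlers to stay away, so truly sensitive data also needs proper access control.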
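One way to keep such a list manageable is to generate the file from a list of paths. The following is a rough sketch rather than a recommended configuration: build_robots_txt is a hypothetical helper, and the paths (/customer/, /checkout/, /catalogsearch/) are assumed from a default Magento setup, so check them against the URLs your own store actually uses.

```python
def build_robots_txt(disallowed_paths, sitemap_url=None, user_agent="*"):
    """Build robots.txt text with one entry that disallows the given paths."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    if sitemap_url:
        # A blank line separates the entry from the sitemap reference.
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Assumed paths for account, checkout/cart and internal search pages.
PRIVATE_PATHS = ["/customer/", "/checkout/", "/catalogsearch/"]

print(build_robots_txt(PRIVATE_PATHS, "https://www.example.com/sitemap.xml"))
```

The printed text can then be saved as robots.txt in the root directory of the site.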

If you do not have a robots.txt file on your site, we highly recommend that you create one right away.


Thanks for your time.